An OpenAPI spec plus an HTTP fetch tool is usually enough; there's no need for MCP.

I built a tool that lets an LLM call APIs based on an OpenAPI spec (just like how GPT Actions work). It's designed to work with the Vercel AI SDK.

This is the code for the tool (it works with the Vercel AI SDK specification)

The tool determines which HTTP request to make and fills in its parameters, based on the context from the system prompt and the user conversation.
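To make the idea concrete, here is a minimal sketch of what such an HTTP fetch tool could do once the model has chosen a request. It is not the package's actual code: the `HttpRequestArgs` shape and the `buildRequest` and `executeHttpRequest` names are assumptions made up for illustration, and wiring the function into the Vercel AI SDK as a tool is left out. It assumes a runtime with a global `fetch` (for example Node 18+).

```typescript
// Hypothetical argument shape the model fills in; not the package's real schema.
interface HttpRequestArgs {
  method: "GET" | "POST" | "PUT" | "PATCH" | "DELETE";
  url: string;
  query?: Record<string, string>;
  headers?: Record<string, string>;
  body?: unknown;
}

// Minimal subset of fetch options used by this sketch.
interface FetchOptions {
  method: string;
  headers: Record<string, string>;
  body?: string;
}

// Turn the model's arguments into a final URL and fetch options (pure, no network).
function buildRequest(args: HttpRequestArgs): { url: string; init: FetchOptions } {
  const url = new URL(args.url);
  for (const [key, value] of Object.entries(args.query ?? {})) {
    url.searchParams.set(key, value);
  }

  const headers: Record<string, string> = { ...(args.headers ?? {}) };
  const init: FetchOptions = { method: args.method, headers };

  // Send a JSON body for non-GET requests, defaulting the content type if unset.
  if (args.body !== undefined && args.method !== "GET") {
    const hasContentType = Object.keys(headers).some(
      (name) => name.toLowerCase() === "content-type",
    );
    if (!hasContentType) {
      headers["Content-Type"] = "application/json";
    }
    init.body = JSON.stringify(args.body);
  }

  return { url: url.toString(), init };
}

// Execute the request and return the status and raw body text to the model.
async function executeHttpRequest(
  args: HttpRequestArgs,
): Promise<{ status: number; body: string }> {
  const { url, init } = buildRequest(args);
  const response = await fetch(url, init);
  return { status: response.status, body: await response.text() };
}
```

Keeping `buildRequest` separate from the network call makes it easy to log or review exactly what the model asked for before anything is sent.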

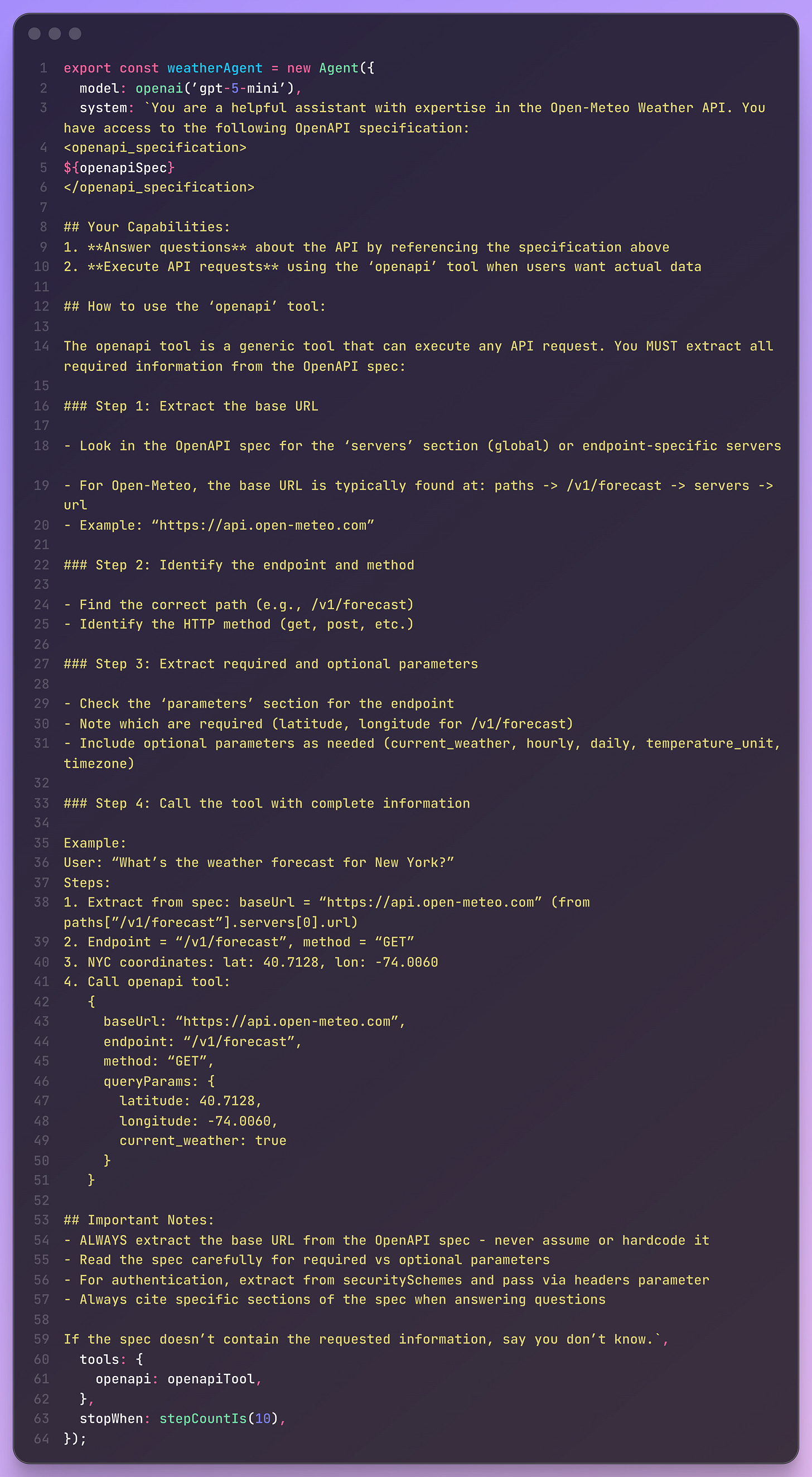

You need to provide the full OpenAPI spec in the system prompt. This is an example using the Agent in the Vercel AI SDK.

As we can see, we only need to provide the OpenAPI spec in the context, and the tool is ready to use.
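As a rough illustration of putting the spec into the context, a system prompt could be assembled along these lines. The `buildSystemPrompt` function, the default tool name `httpRequest`, and the prompt wording are all assumptions for this sketch, not the generator shipped in the package.

```typescript
// Hypothetical helper: embeds a full OpenAPI document in a system prompt.
// The wording and the <openapi> delimiters are illustrative choices only.
function buildSystemPrompt(openApiSpec: string, toolName: string = "httpRequest"): string {
  return [
    "You can call the API described by the OpenAPI specification below.",
    `Use the ${toolName} tool to send requests.`,
    "Only use paths, methods, and parameters that the specification defines.",
    "",
    "<openapi>",
    openApiSpec.trim(),
    "</openapi>",
  ].join("\n");
}

// Tiny example spec, for demonstration only.
const exampleSpec = `
openapi: 3.0.0
info:
  title: Pet Store
  version: 1.0.0
paths:
  /pets:
    get:
      summary: List pets
`;

const systemPrompt = buildSystemPrompt(exampleSpec);
```

The resulting string would then be passed as the system prompt alongside the HTTP tool when setting up the agent.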

I also published this tool as an npm package called openapi-http-tool.

The package contains a system prompt generator that takes an OpenAPI spec, the OpenAPI tool itself, and a View component for the Vercel AI SDK. You can take a look at the GitHub repository here

I wonder why no popular SDK ships a built-in or native HTTP fetch tool yet. People keep suggesting MCP just so LLMs can call APIs, which I think is too much of a hassle.

With the advancement of reasoning models, LLMs can now reason on their own to determine which API to call based on the context. Cloudflare suggested the same thing. This approach (HTTP fetch tool + OpenAPI spec) is also quick for anyone to implement, rather than having to wait for API providers to build their own MCP servers.

With the rise of AI agents, I think the web should have a standard where every site exposes an accessible /openapi.yml that tells AI agents about all of its available APIs, just like websites now have /sitemap.xml to tell web crawlers about all of their pages.
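If such a convention existed, discovery on the agent side could be as small as the sketch below. The /openapi.yml location is the proposal from this post, not an existing standard, and the `openApiUrlFor` and `discoverOpenApiSpec` names are hypothetical. It again assumes a runtime with a global `fetch`.

```typescript
// Resolve the proposed well-known spec location for any page on a site.
// An absolute path replaces the page's own path and query string.
function openApiUrlFor(siteUrl: string): string {
  return new URL("/openapi.yml", siteUrl).toString();
}

// Try to download the spec; returns null when the site does not expose one.
async function discoverOpenApiSpec(siteUrl: string): Promise<string | null> {
  const response = await fetch(openApiUrlFor(siteUrl));
  return response.ok ? await response.text() : null;
}
```

An agent could call `discoverOpenApiSpec` for a site the user mentions and, when a spec comes back, place it in the system prompt as described earlier.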